Machine Learning in Robotics: Enhancing Autonomy and Decision-Making

Adding Machine Learning (ML) to robotics has greatly advanced intelligent automation. Traditional robots follow predetermined rules, but ML-enabled robots can perceive their environment, learn from data and make their own decisions. As a result, robots can respond to changing circumstances, which increases both their efficiency and the range of tasks they can perform. Factories, hospitals, warehouses and farms are already benefiting, helped further by advances in sensor technology. Robots that use ML improve with experience, complete complex jobs and adjust their responses to new challenges. Consequently, robots can now operate more independently, make better decisions and be deployed more widely in the real world.

The Role of Machine Learning in Robotics

1. Perception and Sensing

Robots depend on a range of sensors to perceive the world around them. These include cameras for vision, LiDAR for measuring space and distance, ultrasonic sensors for detecting nearby objects and Inertial Measurement Units (IMUs) for tracking movement and orientation. ML algorithms turn the raw data from these sensors into meaningful information. With ML-based computer vision, robots can detect, recognize and classify objects almost instantly. Deep learning models allow robots to recognize people, vehicles and potential obstacles in crowded settings. Sensor fusion combines data from several sensors to build a more reliable and complete model of the environment. As a result, robots can understand their surroundings, avoid accidents and navigate difficult spaces safely. Reliable perception is what allows autonomous robots to function well in changing surroundings.
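Sensor fusion can take many forms, from Kalman filters to learned models. Its simplest version, shown here as a minimal sketch, is inverse-variance weighting, assuming two independent sensors that each report the same distance along with a known noise variance. Noisier readings get less weight, and the fused estimate is less uncertain than either input. The sensor readings below are hypothetical.

```python
def fuse_measurements(readings):
    """Fuse independent estimates of the same quantity by inverse-variance weighting.

    readings: list of (value, variance) pairs, one per sensor (assumed independent).
    Returns (fused_value, fused_variance).
    """
    if not readings:
        raise ValueError("need at least one reading")
    if any(var <= 0 for _, var in readings):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in readings]   # precise sensors weigh more
    total = sum(weights)
    fused_value = sum(w * v for w, (v, _) in zip(weights, readings)) / total
    fused_variance = 1.0 / total                   # smaller than any single variance
    return fused_value, fused_variance


# Hypothetical readings of the distance (in metres) to the same obstacle.
lidar = (2.00, 0.01)       # precise sensor: low variance
ultrasonic = (2.30, 0.09)  # noisier sensor: higher variance
value, variance = fuse_measurements([lidar, ultrasonic])
print(f"fused distance = {value:.3f} m, variance = {variance:.4f}")
```

The fused distance lands much closer to the LiDAR reading because its variance is nine times smaller.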

2. Decision-Making and Autonomy

Machine Learning enables robots to choose sensible actions on their own. Conventional robots are limited to fixed tasks, but ML-enabled robots can evaluate current information and react to changes in their environment. Reinforcement Learning (RL), for example, lets a robot discover how to act successfully by trying different actions and receiving rewards for good outcomes and penalties for poor ones. Because they learn as they work, robots improve over time, cope with situations they have not seen before and carry out complex activities with little human help. ML therefore makes robots more independent and more useful in logistics, healthcare and manufacturing.
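The reward-driven trial and error described above can be illustrated with tabular Q-learning, one classic RL algorithm. This sketch assumes a hypothetical five-cell corridor in which the robot starts at one end, earns a reward of +1 for reaching the other end and pays a small penalty for every other step. The environment, rewards and hyperparameters are illustrative choices, not taken from any particular robot.

```python
import random

N_STATES = 5          # cells 0..4 in a hypothetical 1-D corridor
GOAL = N_STATES - 1   # reaching the last cell ends the episode
ACTIONS = (-1, +1)    # index 0 = move left, index 1 = move right


def step(state, action):
    """Toy environment: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == GOAL:
        return next_state, 1.0, True      # reward for reaching the goal
    return next_state, -0.01, False       # small penalty for every other step


def train_q_table(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit the best known action, sometimes explore.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update toward reward + discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q = train_q_table()
policy = ["right" if row[1] > row[0] else "left" for row in q[:GOAL]]
print(policy)
```

After training, the greedy policy should point toward the goal from every non-goal cell. Real robots face continuous states and actions, so practical systems usually replace the table with a neural network, but the update follows the same idea.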

3. Adaptability and Learning

ML improves how well a robot responds to changes in its surroundings. Unlike traditional robots, which do only what they are programmed to do, ML-powered robots can adapt their actions over time. This matters because real-world conditions can change unexpectedly.

Autonomous cars and healthcare robots use ML to react in real time to what is happening on the road and in hospitals. Through supervised learning and reinforcement learning, robots improve with each interaction. They can identify trends, predict what is likely to happen and adjust their behavior accordingly. Because they keep learning and adapting, they can handle complex, real-world problems largely on their own. ML-driven robots are therefore more productive and better able to cope with changing conditions in fields such as transportation, healthcare, agriculture and customer service.
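One simple way for a robot to improve with each interaction is online supervised learning: after every new labelled example, the model takes one small gradient step to reduce its prediction error. The sketch below assumes a single input feature and a linear model. The data stream (following y = 2x + 1) is synthetic and stands in for a hypothetical mapping from a sensor reading to an outcome the robot wants to predict.

```python
class OnlineLinearModel:
    """Predicts y from a single feature x and updates after every new example."""

    def __init__(self, learning_rate=0.05):
        self.w = 0.0
        self.b = 0.0
        self.lr = learning_rate

    def predict(self, x):
        return self.w * x + self.b

    def update(self, x, y):
        # One stochastic-gradient step on the squared error (pred - y)^2 / 2.
        error = self.predict(x) - y
        self.w -= self.lr * error * x
        self.b -= self.lr * error
        return error


# Hypothetical, noise-free stream of (feature, target) pairs following y = 2x + 1.
model = OnlineLinearModel()
stream = [(x / 10, 2 * (x / 10) + 1) for x in range(11)] * 200
for x, y in stream:
    model.update(x, y)
print(round(model.w, 2), round(model.b, 2))
```

Because the model updates after every example, its predictions keep improving as more interactions arrive, without retraining from scratch.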

Applications of Machine Learning in Robotics

1. Autonomous Vehicles

Autonomous vehicles, such as self-driving cars and drones, rely on Machine Learning (ML). They use LiDAR, radar and cameras to gather real-time data about their surroundings. From this data, ML algorithms detect obstacles, identify traffic signs and markings, predict what other road users will do and help plan appropriate routes. This lets vehicles react quickly and sensibly, which improves their safety and navigation. Some newer approaches incorporate “social sensitivity” models, which guide vehicles to avoid harming vulnerable road users in emergencies.

2. Industrial Automation

ML-based robots are enabling industrial machines to take on many new, more advanced tasks. In manufacturing and logistics, ML-powered machines learn from their environment and continually improve their processes. For example, warehouse robots use historical movement data to improve their picking and to respond to changing inventory levels. This ability to learn helps prevent breakdowns, improves efficiency and reduces the need for human supervision. As a result, companies can expect higher productivity, safer operations and lower costs.
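As a hypothetical illustration of learning from previous movement data, a warehouse robot could keep a running estimate of how long trips to each storage location actually take and use those estimates when deciding which picks to do first. The sketch assumes an exponential moving average for the estimates and a simple shortest-trip-first ordering. The class name, default values and location labels are invented for illustration; a production system would plan a full route and account for many more factors.

```python
class TravelTimeEstimator:
    """Hypothetical helper: learns an expected travel time per storage location."""

    def __init__(self, smoothing=0.3, default_seconds=60.0):
        self.smoothing = smoothing          # weight given to the newest trip
        self.default = default_seconds      # guess for locations never visited
        self.estimates = {}

    def record_trip(self, location, seconds):
        # Exponential moving average: recent trips count more than old ones.
        old = self.estimates.get(location, seconds)
        self.estimates[location] = (1 - self.smoothing) * old + self.smoothing * seconds

    def estimate(self, location):
        return self.estimates.get(location, self.default)

    def order_picks(self, locations):
        # Greedy heuristic: visit locations with the shortest expected trip first.
        return sorted(locations, key=self.estimate)


estimator = TravelTimeEstimator()
estimator.record_trip("A", 40.0)
estimator.record_trip("A", 50.0)   # estimate for A moves from 40 toward 50
estimator.record_trip("B", 20.0)
print(estimator.order_picks(["C", "A", "B"]))  # C has no history, so it uses the default
```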

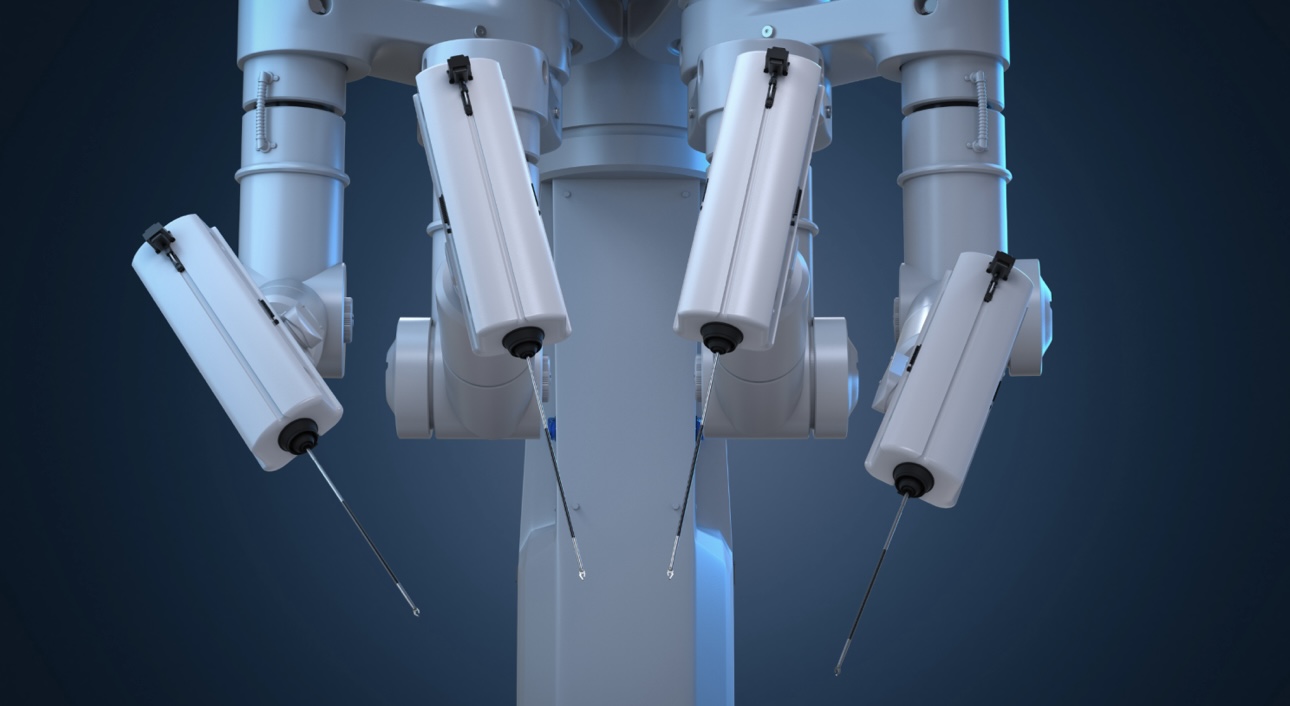

3. Healthcare Robotics

ML is making healthcare robotics more precise, more adaptable to individual needs and more efficient overall. Surgical robots use ML to analyze images and data, helping surgeons perform careful, minimally invasive operations. As a result, patients can recover more quickly and with fewer complications. In medical settings, ML-enabled robots can monitor vital signs, assist with everyday tasks and respond appropriately to patients. Rehabilitation robots use machine learning to adjust therapy plans according to each person’s progress. These technologies are making healthcare more personalized and accessible, which benefits both patients and doctors.

4. Perception and Object Recognition

ML-enabled robots can now perceive and interpret what is happening around them, in some respects approaching human perception. Using computer vision and deep learning, these robots process data from cameras, sensors and LiDAR to recognize and categorize objects in real time. In autonomous vehicles, recognizing traffic signs, pedestrians and hazards is crucial to safe operation. In manufacturing, robots use ML to inspect goods for defects with high accuracy. Because robots can perceive their surroundings, they can work more independently and with greater accuracy across many fields.
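Production defect-inspection systems typically run deep convolutional networks on images. To show the underlying idea of learning categories from labelled examples, this sketch swaps in a much simpler technique, a nearest-centroid classifier, over two hypothetical hand-crafted features per part (a scratch score and a color deviation, both invented for illustration).

```python
import math


def train_centroids(samples):
    """samples: list of (feature_vector, label). Returns {label: centroid}."""
    sums, counts = {}, {}
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    # The centroid of each class is the mean of its feature vectors.
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def classify(centroids, features):
    # Assign the label whose centroid is closest in Euclidean distance.
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# Hypothetical labelled parts: [scratch_score, color_deviation].
training = [
    ([0.10, 0.20], "ok"), ([0.20, 0.10], "ok"), ([0.15, 0.15], "ok"),
    ([0.80, 0.90], "defect"), ([0.90, 0.70], "defect"),
]
centroids = train_centroids(training)
print(classify(centroids, [0.2, 0.25]), classify(centroids, [0.7, 0.8]))
```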

5. Human-Robot Interaction (HRI)

Machine Learning allows robots to respond more effectively to people’s actions. They can recognize facial expressions, understand voice commands and detect a user’s emotional state. This makes robots more useful in roles that involve close contact with people, such as customer service, caregiving and teaching. Social intelligence also allows these robots to learn from repeated interactions, so they respond more smoothly and depend less on explicit programming. As a result, working with robots becomes easier and more rewarding for humans.

6. Learning from Demonstration

Robots can now observe a person carrying out a task and use imitation learning to reproduce it. This makes it possible to build robots that handle detailed, practical jobs in construction, cooking and caregiving. Because they keep learning from human demonstrations, these robots can improve continuously, which makes them adaptable and economical for many uses.
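The simplest form of imitation learning is behavioral cloning: record (state, action) pairs while a person performs the task, then fit a policy that maps states to actions. This sketch assumes a hypothetical one-dimensional task in which the state is the remaining distance to a target and the action is a commanded speed, and fits a linear policy by ordinary least squares. Real systems usually fit neural networks to high-dimensional sensor data.

```python
def fit_linear_policy(demonstrations):
    """Least-squares fit of action = gain * state + offset from (state, action) pairs."""
    n = len(demonstrations)
    if n < 2:
        raise ValueError("need at least two demonstrations")
    mean_s = sum(s for s, _ in demonstrations) / n
    mean_a = sum(a for _, a in demonstrations) / n
    cov = sum((s - mean_s) * (a - mean_a) for s, a in demonstrations)
    var = sum((s - mean_s) ** 2 for s, _ in demonstrations)
    if var == 0:
        raise ValueError("demonstrated states must vary")
    gain = cov / var
    offset = mean_a - gain * mean_s
    return lambda state: gain * state + offset   # the cloned policy


# Hypothetical demonstrations: (distance to target in m, speed chosen by the person in m/s).
demos = [(0.0, 0.0), (0.5, 0.25), (1.0, 0.5), (2.0, 1.0)]
policy = fit_linear_policy(demos)
print(policy(1.5))  # speed the robot would choose in a state the person never showed
```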

Challenges and Future Directions

1. Data Quality and Availability

The success of ML in robotics depends heavily on the quantity and quality of its training data. Collecting large, high-quality datasets for robotics is usually difficult and costly, and it is especially hard to cover every type of robot and every situation in which robots are used. Researchers are therefore generating synthetic data to supplement and enrich the real data they have. Transfer learning is another useful approach: it lets robots apply what they learned on one task to related tasks, so less new data needs to be collected. Both techniques aim to make ML models robust and accurate even when little real data is available.
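Synthetic data for robotics is often produced in physics simulators. A much simpler form, shown here as a sketch, is data augmentation: creating perturbed copies of the few real samples available. The function assumes each sample is a list of numeric sensor readings with a label, and applies a random scale factor plus small Gaussian noise. The noise levels are illustrative defaults, not recommended values, and the sample data is hypothetical.

```python
import random


def augment(samples, copies_per_sample=5, noise_std=0.02, scale_range=(0.95, 1.05), seed=0):
    """Create synthetic variants of real sensor readings.

    samples: list of (reading_list, label). Each synthetic copy keeps the label,
    is rescaled by one random factor, and gets small Gaussian noise per value.
    Returns the original samples followed by the synthetic ones.
    """
    rng = random.Random(seed)
    synthetic = []
    for reading, label in samples:
        for _ in range(copies_per_sample):
            scale = rng.uniform(*scale_range)
            noisy = [scale * v + rng.gauss(0.0, noise_std) for v in reading]
            synthetic.append((noisy, label))
    return samples + synthetic


# Hypothetical real samples: two readings per sample, labelled by a person.
real = [([1.0, 2.0], "ok"), ([3.0, 1.0], "defect")]
augmented = augment(real)
print(len(real), "->", len(augmented), "samples")
```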

2. Real-Time Processing

Robots often operate in settings, such as self-driving vehicles and automated factories, where decisions must be made quickly. Their algorithms must process what is happening around them and act quickly enough to keep up with changes in the environment. Because machine learning models, particularly deep learning models, can be computationally demanding, meeting these deadlines is difficult. For this reason, more powerful GPUs and edge computing devices, which perform computation on or near the robot, are being developed. Researchers are also working on ways to make robot algorithms use less computing power while remaining accurate, so that robots can handle urgent situations safely.
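One common way to cut the computing cost of a trained model is quantization: storing weights as 8-bit integers plus a scale factor instead of 32-bit floats. The sketch below shows symmetric per-tensor int8 quantization on a short, hypothetical weight list, using plain Python integers to stand in for the int8 storage a real inference framework would use. Because the largest weight maps exactly to 127, no value is clipped and the rounding error per weight is at most half the scale factor, which is why accuracy can often be largely preserved.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w is approximately scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    # Recover approximate float weights for comparison.
    return [scale * v for v in q]


weights = [0.42, -1.27, 0.003, 0.9, -0.05]   # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max error={max_error:.4f}")
```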

3. Ethical and Safety Concerns

The more autonomous robots become, the more attention must be paid to safety and ethics. Autonomous systems are expected to act in ways that are consistent with human values, safety laws and regulations. In an accident, for example, a self-driving car might have to choose between protecting its occupants and protecting people outside the vehicle. Researchers are now building ethical and safety constraints into ML designs to guide robot decision-making. Through transparency, accountability and rigorous testing, autonomous robots can earn trust that they will behave well in difficult situations.
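One way to build safety constraints into an ML-driven system is a runtime safety filter that checks each command from the learned policy against a hard rule before it is executed. This sketch assumes a hypothetical mobile robot with a known maximum braking deceleration a: a proposed speed is allowed only if the robot could still stop before the nearest obstacle with a safety margin, using the constant-deceleration relation v² / (2a) ≤ d - margin. The function names, margin and deceleration values are illustrative assumptions.

```python
import math


def safe_speed_limit(distance_to_obstacle, max_decel, margin=0.5):
    """Highest speed from which the robot can still stop before the obstacle.

    Uses v^2 / (2 * a) <= d - margin, i.e. v <= sqrt(2 * a * (d - margin)).
    """
    usable = distance_to_obstacle - margin
    if usable <= 0:
        return 0.0                     # already inside the margin: stop
    return math.sqrt(2 * max_decel * usable)


def filter_command(proposed_speed, distance_to_obstacle, max_decel=2.0):
    """Pass the learned policy's forward-speed command through unless it is unsafe."""
    limit = safe_speed_limit(distance_to_obstacle, max_decel)
    safe = min(proposed_speed, limit)
    return safe, safe < proposed_speed  # (command to execute, whether it was overridden)


# Hypothetical policy output of 3.0 m/s at three obstacle distances (m).
for distance in (10.0, 1.5, 0.3):
    print(distance, filter_command(3.0, distance))
```

The filter never makes the learned policy smarter; it only guarantees that whatever the policy proposes stays within a constraint that can be checked and tested independently.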

4. Human-Robot Interaction

To collaborate smoothly in shared spaces, humans and robots need to understand each other clearly. Machine Learning improves human-robot interaction by allowing robots to interpret signals from human speech, gestures, facial expressions and emotions. With ML-based natural language processing (NLP), robots can communicate with people verbally more naturally and quickly. Computer vision helps robots notice and interpret people’s hand movements and facial expressions. Making human-robot communication more intuitive is one of the main goals of robotics research, and interfaces based on touch, speech and augmented reality (AR) make interacting with robots much simpler. In addition, as robots become better at responding to emotions and matching human behavior, trust, collaboration and user satisfaction increase in many environments, such as homes, hospitals and offices.

Conclusion

Machine Learning is making robots smarter and more capable of making decisions on their own. Because they can perceive, learn and adapt, ML-driven robots can operate in new places and complete tasks alongside people. Despite challenges involving data, real-time processing and ethics, ongoing research and advances in robotic technology are making robots smarter, safer and more people-friendly. As innovations in robotics continue, these robots are likely to help us improve productivity, safety and innovation in many fields.
