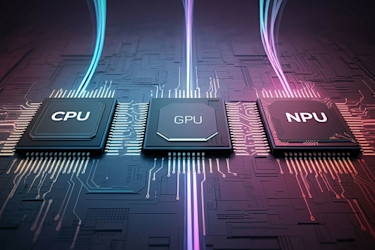

NPUs vs. GPUs for Edge AI: Choosing the Right AI Accelerator

Deploying artificial intelligence (AI) at the edge presents unique hardware hurdles. While powerful graphics processing units (GPUs) are common in development, real-world deployments often face form factor, power consumption, environmental, and budget constraints. These limitations can make discrete GPUs (dGPUs) a less-than-ideal solution compared to more energy-efficient options.

Achieving your edge AI acceleration goals is still possible. Let's explore the NPU vs. GPU landscape for edge AI, covering integrated GPUs (iGPUs), neural processing units (NPUs), and expansion cards, and examine how each addresses industrial AI inference and machine learning needs.


The dynamic landscape of edge AI acceleration

General-purpose GPUs, despite their high performance potential, often struggle in large-scale edge deployments because of limited energy efficiency, sensitivity to harsh conditions (dust, vibration), and significant size and cost. Fortunately, AI accelerator technology for the edge is advancing rapidly. For distributed architectures outside the data center, or for lightweight AI applications that must balance data processing performance, cost, and power efficiency, careful evaluation of your specific requirements and workloads is crucial. Using specialized processors like NPUs within robust, fanless industrial computers improves reliability in extreme conditions and can reduce hardware expenses.

Compared to standalone edge AI platforms, integrating accelerators such as NPUs, other AI-specific processors, and even MXM accelerators offers targeted computational power precisely where AI workloads need it. Evaluating the total system cost and the software framework ecosystem is vital when selecting an AI accelerator. While AI expansion cards boost performance, they can significantly increase system cost. Often, integrated solutions like iGPUs and NPUs, or dedicated platforms like NVIDIA Jetson, provide a more streamlined and cost-effective approach. Let's examine each option in detail, considering the trade-offs among NPUs, GPUs, and CPUs.

NPU-powered AI: Efficient on-chip acceleration for neural networks

Historically, powerful central processing units (CPUs) and GPUs dominated AI workloads and algorithms, thanks to their broad software compatibility. But integrated graphics and acceleration are advancing, with modern processors and SoCs offering valuable on-board industrial AI inference and machine learning capabilities.

The key innovation lies in integrated NPUs such as Intel® AI Boost (Intel® Core™ Ultra processors) and AMD XDNA (select Ryzen 7000/8000 series processors). These dedicated co-processors feature specialized circuitry for the matrix multiplication and tensor operations essential to neural networks, accelerating deep learning directly on the processor. While their performance is generally suited to background image and audio processing and to offloading the CPU, they provide a low-latency, power-efficient solution for light to moderate edge AI inference, especially in space-constrained or rugged environments with tight power budgets.

For example, NPUs excel at specific tasks like real-time object detection in low-resolution video or voice recognition, often delivering several TOPS (trillions of operations per second) of AI performance. That makes them popular in AI-enabled consumer electronics like smartphones and IoT devices, and it highlights a key aspect of the NPU vs. GPU decision for specific edge computing use cases.
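As a rough sizing aid, the throughput figures above can be related to a workload with simple arithmetic: operations per inference times inferences per second, divided by the fraction of the peak rating a model realistically achieves. The sketch below is only an illustration; the function name, the 8 GOPs model size, and the 30% utilization figure are assumptions, not measurements from any specific device.

```python
def required_tops(gops_per_inference: float, fps: float, utilization: float = 0.3) -> float:
    """Estimate the peak TOPS rating needed to sustain a workload.

    gops_per_inference: billions of operations for one forward pass (assumed input).
    fps: inferences per second the application must sustain.
    utilization: assumed fraction of the peak rating the model achieves in practice.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    # Convert giga-operations per inference into tera-operations per second.
    sustained_tops = gops_per_inference * 1e9 * fps / 1e12
    return sustained_tops / utilization


# Hypothetical detector: 8 GOPs per frame at 30 frames per second.
needed = required_tops(gops_per_inference=8, fps=30, utilization=0.3)
print(f"Peak rating to look for: about {needed:.1f} TOPS")
```

A result well under a few TOPS suggests an NPU may be enough; a much larger number points toward an iGPU, an expansion card, or a dedicated platform. Real utilization varies widely by model and toolchain, so treat the estimate as a starting point for benchmarking.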

iGPUs: The often overlooked AI resource for parallel processing

Modern integrated GPUs (iGPUs) like Intel Arc and AMD Radeon AI are surprisingly capable for industrial AI inference and even some model training. Their parallel processing architecture lets them handle a wider range of AI tasks than is commonly assumed. For AI applications requiring moderate performance, leveraging the iGPU can be a cost-effective and power-efficient approach.

These iGPUs represent a substantial upgrade from basic graphics hardware: they are designed for parallel computing and offer a balance of performance and power efficiency. For instance, Intel Arc GPUs can achieve tens of TOPS, suitable for video analytics, image recognition, image processing, video editing, and even light machine learning inference. These advancements make them a viable option when an NPU isn't sufficient but a dedicated GPU's power, space, or cost isn't feasible. The performance difference between NPUs and GPUs becomes clearer here, especially when considering specific workloads.

AI expansion cards: Targeted performance with specialized processors

While NPUs and iGPUs offer notable edge AI performance gains, dedicated AI expansion cards featuring specialized processors can further enhance performance for specific tasks and AI workloads. M.2 modules, like the Hailo-8 edge AI processor, provide a convenient way to add considerable computational power. Compared to Google's Edge TPU (tensor processing unit), the Hailo-8 offers significantly higher rated performance (26 TOPS vs. 4 TOPS) with similar power consumption.

OnLogic's ML100G-56, for example, integrates Hailo-8 cards, adding impressive AI processing capabilities to an ultra-compact industrial computing platform suitable for diverse deployments.

MXM (Mobile PCI Express Module) accelerators, sometimes incorporating NVIDIA RTX technology, are also emerging in the industrial computing sector. These compact, removable GPU modules are designed for space-constrained systems, enabling a significant increase in graphics rendering and AI processing power without the footprint of a full-sized PCIe card, making them ideal for rugged and embedded AI applications requiring enhanced edge AI acceleration.

NVIDIA Jetson: A versatile high-performance solution for complex AI models

For workloads that exceed the capabilities of NPUs, iGPUs, or AI expansion cards, the NVIDIA Jetson family offers a robust and adaptable solution for industrial AI inference. With a broad performance spectrum and a mature software ecosystem, Jetson platforms are well-suited for demanding AI applications like complex deep learning models, generative AI, graphics rendering, large language models (LLMs), and natural language processing. Importantly, they also address the ruggedization challenges associated with traditional GPUs in edge computing deployments.

The NVIDIA Jetson range extends from the entry-level Jetson Nano to the high-performance Jetson Orin NX and AGX Orin. The AGX Orin can deliver significant computational power, making it suitable for complex AI models and demanding applications such as autonomous vehicles, robotics, and advanced video analytics. Jetson also features a unified software stack, simplifying the deployment of AI models across different Jetson platforms. This offers a powerful alternative in the NPU vs. GPU vs. CPU decision for demanding AI workloads.

Toolkit compatibility: Unleashing the hardware potential for AI applications

To fully leverage these AI accelerator options for industrial AI inference, the appropriate software tools and frameworks are essential. These tools generally follow a three-stage process:

- Model input: Utilizing a trained model from a supported training framework.

- Optimization: Optimizing and quantizing the model for the specific target hardware to avoid bottlenecks.

- Deployment: Running the prepared model with the vendor's runtime on the target operating system.
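To make the optimization stage more concrete, here is a minimal sketch of symmetric per-tensor int8 quantization, one common way of shrinking model weights for an accelerator. It is written in plain Python for illustration only; the function names and weight values are made up, and vendor toolkits apply their own, more sophisticated schemes (calibration data, per-channel scales, and so on).

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization of floats to the int8 range [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One scale for the whole tensor; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Map int8 values back to approximate floats."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.05, 0.0, 0.64]  # made-up example values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max error={max_error:.4f}")
```

The rounding error per weight is bounded by half the scale, which is why quantized models usually lose only a little accuracy while using a quarter of the memory of 32-bit floats.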

Each vendor of integrated accelerator technologies provides a list of supported frameworks for hardware acceleration. Here’s a brief overview, though support can vary across different operating systems:

| Target Hardware | Supported Frameworks |
| --- | --- |
| Intel Arc Integrated Graphics | OpenVINO, WindowsML/DirectML, ONNX RT, WebGPU |
| Intel AI Boost NPU | OpenVINO, WindowsML/DirectML, ONNX RT |
| AMD Radeon AI Integrated Graphics | ONNX RT via AMD Vitis Execution Provider |
| AMD XDNA NPU | ONNX RT via AMD Vitis Execution Provider |
| NVIDIA GPUs (RTX, Tensor Cores) | CUDA, TensorFlow, PyTorch, and others |
| NVIDIA Jetson | CUDA, TensorFlow, PyTorch, and others |
| Google TPU | TensorFlow |
| Qualcomm Snapdragon | Open-source and proprietary frameworks for mobile devices |
| Apple Silicon Neural Engine (in laptops, etc.) | Core ML |

Always consult the vendor's latest documentation for up-to-date framework support and compatibility with different operating systems.
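Because ONNX Runtime appears for several of the targets above, one way to keep application code portable is to choose an execution provider at startup. The sketch below assumes the provider names that ONNX Runtime builds register; the preference order and helper names are illustrative choices, not a vendor recommendation, and which providers actually appear depends on the ONNX Runtime package installed.

```python
from typing import Iterable, List, Optional

# Execution provider names as registered by ONNX Runtime builds.
# The ordering is an illustrative preference only.
PREFERRED_PROVIDERS: List[str] = [
    "CUDAExecutionProvider",      # NVIDIA GPUs and Jetson
    "OpenVINOExecutionProvider",  # Intel iGPU / NPU via OpenVINO
    "VitisAIExecutionProvider",   # AMD XDNA NPU
    "DmlExecutionProvider",       # DirectML on Windows
    "CPUExecutionProvider",       # fallback
]


def pick_provider(available: Iterable[str], preferred: Optional[List[str]] = None) -> str:
    """Return the first preferred provider that the runtime reports as available."""
    available_set = set(available)
    for name in preferred or PREFERRED_PROVIDERS:
        if name in available_set:
            return name
    raise RuntimeError("No preferred execution provider is available")


def detect_available() -> List[str]:
    """Ask ONNX Runtime which providers this build supports; assume CPU only if absent."""
    try:
        import onnxruntime as ort  # optional dependency
    except ImportError:
        return ["CPUExecutionProvider"]
    return ort.get_available_providers()


print(pick_provider(detect_available()))
```

The chosen name can then be passed in the `providers` list when creating an `onnxruntime.InferenceSession`, so the same application runs on different accelerators without code changes.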

Making the right choice for your edge AI deployment

It's vital to recognize that iGPUs, NPUs, and expansion cards are not universally applicable solutions for all computing tasks. They may not always match the raw computational power of a high-performance discrete GPU, emphasizing the importance of optimization and benchmarking for successful edge AI implementations and avoiding bottlenecks in your data processing pipelines.
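Benchmarking does not need to be elaborate to be useful. The following sketch times any inference callable after a warm-up phase and reports mean, median, and 95th-percentile latency. The `fake_inference` function is a stand-in invented for this example; in practice you would wrap a call into your actual runtime and run it on the target hardware under realistic thermal and power conditions.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(run_inference: Callable[[], object], warmup: int = 10, runs: int = 100) -> Dict[str, float]:
    """Time repeated calls and report latency in milliseconds."""
    for _ in range(warmup):  # let caches, clocks, and lazy initialization settle
        run_inference()
    latencies_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        run_inference()
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    latencies_ms.sort()
    p95_index = min(len(latencies_ms) - 1, int(0.95 * len(latencies_ms)))
    mean_ms = statistics.mean(latencies_ms)
    return {
        "mean_ms": mean_ms,
        "p50_ms": statistics.median(latencies_ms),
        "p95_ms": latencies_ms[p95_index],
        "throughput_per_s": 1000.0 / mean_ms if mean_ms > 0 else float("inf"),
    }


def fake_inference() -> int:
    """Stand-in workload; replace with a call into your real runtime session."""
    return sum(i * i for i in range(10_000))


print(benchmark(fake_inference, warmup=5, runs=50))
```

Comparing the 95th-percentile figure across candidate accelerators, rather than the mean alone, helps reveal stalls that would cause missed frames in a real-time pipeline.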

Selecting the optimal hardware for your industrial AI inference needs and machine learning tasks can be a complex process. OnLogic offers a comprehensive range of rugged and industrial systems, coupled with the expertise to help you translate the potential of AI into tangible business value for your AI applications. Contact our AI team today to discuss the ideal acceleration solution for your specific project and unlock the full computational power of your edge devices.
